
[Machine Learning] 5. Nearest Neighbor Method

🐳Dev/Machine Learning, 2021. 12. 20. 16:16

These notes are based on the Machine Learning course taught by Professor Dongil Kim at Chungnam National University.

1. k-Nearest Neighbors Classifier (k-NN)

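k-NN is the simplest and most intuitive algorithm, and it neatly captures a basic idea of machine learning: "data similar to me explains me." The k in k-NN is a count: it sets how many nearest neighbors are consulted when classifying a point by similarity. k-NN measures similarity by distance, on the assumption that the closer two points are, the more similar they are.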

1) Distance Metric

Let's look at distance functions, which give the distance between each pair of elements in a set.

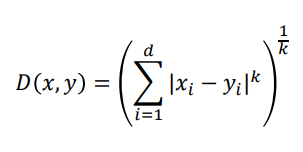

1. Minkowski distance

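The Minkowski distance is the general formula in which the order k is left unspecified; as shown in the following items, the formula takes a different name depending on the value of k. Note that this k is the order of the distance, not the number of neighbors in k-NN.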

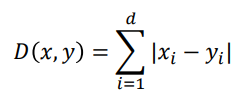

2. k = 1, Manhattan distance

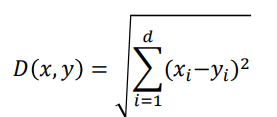

3. k = 2, Euclidean distance

The Euclidean distance is the formula in general use; if no distance formula is stated, you can assume this one was used. The formula is the familiar straight-line distance between two points.

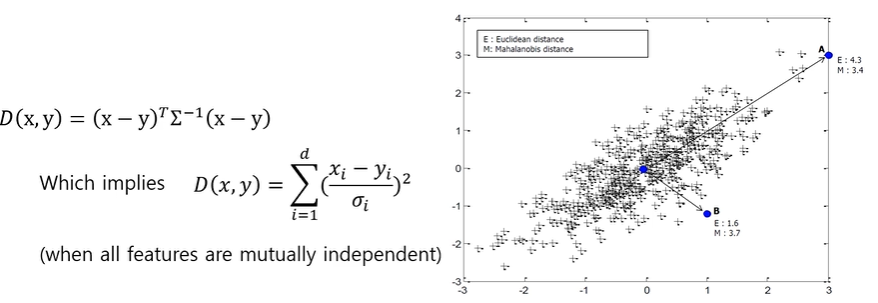
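The three distances in this section can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the lecture; the function names and the sample points `a` and `b` are assumptions made for the example.

```python
import numpy as np

def minkowski_distance(x, y, k):
    """Minkowski distance of order k between two equal-length vectors."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Sum the k-th powers of the absolute differences, then take the k-th root.
    return float(np.sum(np.abs(x - y) ** k) ** (1.0 / k))

def manhattan_distance(x, y):
    """Minkowski distance with k = 1."""
    return minkowski_distance(x, y, 1)

def euclidean_distance(x, y):
    """Minkowski distance with k = 2."""
    return minkowski_distance(x, y, 2)

# Hypothetical sample points.
a = [0.0, 0.0]
b = [3.0, 4.0]
print(manhattan_distance(a, b))  # 7.0 (|3| + |4|)
print(euclidean_distance(a, b))  # 5.0 (sqrt(9 + 16))
```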

4. Mahalanobis distance

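Euclidean distance is heavily affected by the magnitude of the values, but this can be corrected by normalizing with the covariance, that is, by weighting the difference between two points with the inverse of the covariance matrix. This is the Mahalanobis distance. If all variables are assumed to be mutually independent, the covariance matrix is diagonal and the distance reduces to dividing each variable's difference by its standard deviation, so a difference along a high-variance variable counts for less than the same difference along a low-variance one. Put differently, it is the kind of adjustment that lets you judge a score of 70 on an exam with a mean of 50 and a score of 70 on an exam with a mean of 80 by a single standard.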
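As a rough sketch of the Mahalanobis distance, assuming the covariance matrix is given and invertible, the computation could look like this. The function name and the diagonal covariance used in the example (two independent variables with variances 4 and 1) are assumptions for illustration.

```python
import numpy as np

def mahalanobis_distance(x, y, cov):
    """Mahalanobis distance between x and y for a given covariance matrix."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    # Solve cov @ z = diff rather than forming the inverse explicitly.
    z = np.linalg.solve(np.asarray(cov, dtype=float), diff)
    return float(np.sqrt(diff @ z))

# Hypothetical covariance: independent variables with variances 4 and 1.
cov = np.array([[4.0, 0.0],
                [0.0, 1.0]])

origin = [0.0, 0.0]
# Both points are at Euclidean distance 2 from the origin.
print(mahalanobis_distance(origin, [2.0, 0.0], cov))  # 1.0 (high-variance axis)
print(mahalanobis_distance(origin, [0.0, 2.0], cov))  # 2.0 (low-variance axis)
```

With the identity matrix as `cov`, the result is the same as the Euclidean distance.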

5. Distance of categorical features

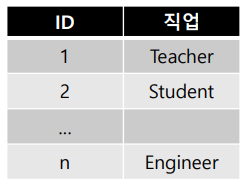

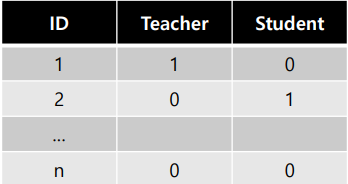

- One-hot encoding (one-of-C coding)

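Suppose we need to encode non-numeric values like those above. Rather than numbering each value, one-hot encoding is what is generally used.

A variable is created for each category; the variable that matches the feature's value is set to 1 and the rest are set to 0. In the example above, the Engineer variable is 0 for every ID except the k-th ID. Because a single 1 is assigned to one variable and 0 to all the others, it is called "one-hot." With this encoding, the distance between any two different categories is always the same value, √2, but the downside is that the number of variables grows considerably. To avoid it, you would have to convert the feature into something like an ordinal or nominal variable, and the distance calculation then becomes very complicated!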
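A small sketch of one-hot encoding, written from scratch with NumPy. The helper name `one_hot_encode` and every job title other than Engineer are hypothetical; the point is only to show that two different categories always end up √2 apart.

```python
import numpy as np

def one_hot_encode(values):
    """Return the sorted category list and a (samples x categories) 0/1 matrix."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = np.zeros((len(values), len(categories)))
    for row, value in enumerate(values):
        # Exactly one column per row is set to 1.
        encoded[row, index[value]] = 1.0
    return categories, encoded

# Hypothetical categorical feature.
jobs = ["Engineer", "Teacher", "Doctor", "Engineer"]
categories, encoded = one_hot_encode(jobs)

print(categories)  # ['Doctor', 'Engineer', 'Teacher']
print(np.linalg.norm(encoded[0] - encoded[1]))  # sqrt(2), about 1.4142
print(np.linalg.norm(encoded[0] - encoded[3]))  # 0.0 (same category)
```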

2) Normalization

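Variables often have different scales, so distortion can arise at any time. Normalization is used to prevent this. Don't forget that normalization is essential whenever you work with distances.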

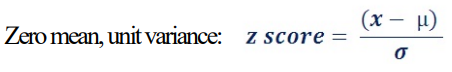

- z-normalization

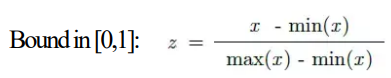

- min-max normalization or scaling
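Both normalizations can be sketched column-wise with NumPy. This is a minimal version that assumes no column is constant (otherwise the denominator is zero); the function names and the sample matrix are assumptions for illustration.

```python
import numpy as np

def z_normalize(X):
    """Shift each column to mean 0 and scale it to standard deviation 1."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / X.std(axis=0)

def min_max_scale(X):
    """Rescale each column linearly onto the range [0, 1]."""
    X = np.asarray(X, dtype=float)
    mins = X.min(axis=0)
    maxs = X.max(axis=0)
    return (X - mins) / (maxs - mins)

# Hypothetical data: the second column is on a much larger scale than the first.
X = np.array([[1.0, 1000.0],
              [2.0, 3000.0],
              [3.0, 2000.0]])

print(z_normalize(X))
print(min_max_scale(X))
```

In practice the mean, standard deviation, minimum, and maximum are usually computed on the training data and then reused to transform the test data.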

3) k-NN

1. Algorithm

- Prepare training data that has class labels

- Add test data that has no labels

- Compute the distance from the test data to every training point

- For each test point, select the k neighbors in order of closeness

- Check the neighbors' labels (vote) and assign the most frequent label to the test point
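The steps above can be sketched as a short function. This is a minimal illustration that assumes Euclidean distance and unweighted majority voting; the function name `knn_predict` and the toy data are hypothetical.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x_query, k=3):
    """Predict the label of x_query by majority vote among its k nearest neighbors."""
    X_train = np.asarray(X_train, dtype=float)
    x_query = np.asarray(x_query, dtype=float)
    # Distance from the query to every training point.
    distances = np.sqrt(((X_train - x_query) ** 2).sum(axis=1))
    # Indices of the k closest training points, nearest first.
    nearest = np.argsort(distances)[:k]
    # Vote: the most frequent label among the neighbors wins.
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: two well-separated groups.
X_train = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
           [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
y_train = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(X_train, y_train, [1.1, 1.0], k=3))  # a
print(knn_predict(X_train, y_train, [5.0, 4.8], k=3))  # b
```

Note that all the work happens inside the prediction call: there is no separate fitting step, only stored training data.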

2. Choosing k

So how should the size of k be decided?

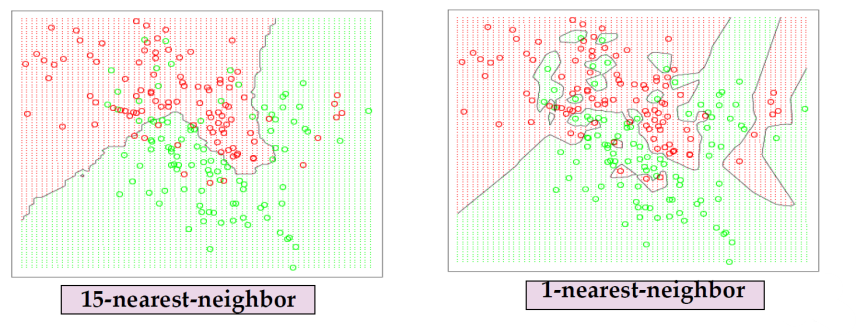

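Prefer an odd k where possible (in a two-class problem an odd k cannot produce a tied vote); beyond that, a suitable k is set by hand through repeated trials.

- If k is large: smoother, but not sensitive enough

- If k is small: complex, but too sensitive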
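One way to run those trials is to score several candidate values of k with leave-one-out evaluation and keep the best one. The sketch below is only an illustration under that assumption (the notes do not prescribe a selection procedure); `loo_accuracy` and the toy data are hypothetical.

```python
from collections import Counter

import numpy as np

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy of k-NN (Euclidean distance, majority vote)."""
    X = np.asarray(X, dtype=float)
    correct = 0
    for i in range(len(X)):
        distances = np.sqrt(((X - X[i]) ** 2).sum(axis=1))
        distances[i] = np.inf  # the held-out point may not vote for itself
        nearest = np.argsort(distances)[:k]
        predicted = Counter(y[j] for j in nearest).most_common(1)[0][0]
        correct += int(predicted == y[i])
    return correct / len(X)

# Hypothetical data: two groups of three points each.
X = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
     [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]]
y = ["a", "a", "a", "b", "b", "b"]

for k in (1, 3, 5):
    print(k, loo_accuracy(X, y, k))
```

On this tiny set, k = 1 and k = 3 classify every held-out point correctly, while k = 5 pulls in all five remaining points, so the held-out point's own class is outvoted 3 to 2 every time. It is an extreme case of a k that is too large to stay sensitive to local structure.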

3. Lazy Learner

k-NN is called a lazy learner for the reasons listed here. The opposite is an eager learner.

- no training process: nothing is computed until data arrives

- reference vectors: the stored data is hard to call a trained model, hence this name

- memory-based reasoning

- time consuming for test process: evaluation takes a long time, which makes real-time use difficult

4. k-NN Regression

Instead of voting, the label is determined by taking the average of the neighbors' label values.
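A minimal sketch of k-NN regression, assuming Euclidean distance and an unweighted mean over the neighbors. The function name `knn_regress` and the sample data are hypothetical.

```python
import numpy as np

def knn_regress(X_train, y_train, x_query, k=3):
    """Predict a numeric target as the mean target of the k nearest neighbors."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    x_query = np.asarray(x_query, dtype=float)
    distances = np.sqrt(((X_train - x_query) ** 2).sum(axis=1))
    nearest = np.argsort(distances)[:k]
    # Average the neighbors' targets instead of voting.
    return float(y_train[nearest].mean())

# Hypothetical one-dimensional data.
X_train = [[1.0], [2.0], [3.0], [10.0]]
y_train = [10.0, 20.0, 30.0, 100.0]

# The three nearest neighbors of 2.1 are 2.0, 3.0, and 1.0.
print(knn_regress(X_train, y_train, [2.1], k=3))  # 20.0
```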

4) Summary

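- Predicts based on nearby neighbors

- It has been proven that the nearest-neighbor rule's error rate is, in the limit of unlimited training data, at most twice the optimal (Bayes) classification error, so k-NN serves as a baseline: if a model does worse than k-NN, don't use it

- Very simple, yet it handles nonlinear problems

- Lazy learning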
